Rename second-edition subfolder to `edition-2`

@@ -1,4 +0,0 @@
+++
title = "Second Edition"
template = "redirect-to-frontpage.html"
+++

@@ -1,7 +0,0 @@
+++
title = "Extra Content"
insert_anchor_links = "left"
render = false
sort_by = "weight"
page_template = "second-edition/extra.html"
+++

|

Before Width: | Height: | Size: 287 KiB |

@@ -1,109 +0,0 @@
+++
title = "Building on Android"
weight = 3

+++

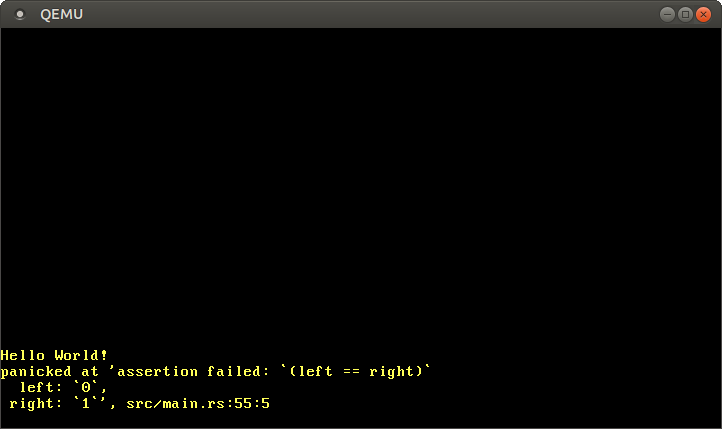

I finally managed to get `blog_os` building on my Android phone using [Termux](https://termux.com/). This post explains the necessary steps to set it up.

<!-- more -->

<img src="building-on-android.png" alt="Screenshot of the compilation output from android" style="height: 50rem;" >

### Install Termux and Nightly Rust

First, install [termux](https://termux.com/) from the [Google Play Store](https://play.google.com/store/apps/details?id=com.termux) or from [F-Droid](https://f-droid.org/packages/com.termux/). After installing, open it and perform the following steps:

- Install fish shell, set as default shell, and launch it:
    ```
    pkg install fish
    chsh -s fish
    fish
    ```

    This step is of course optional. However, if you continue with bash you will need to adjust some of the following commands to bash syntax.

- Install some basic tools:
    ```
    pkg install wget tar
    ```

- Add the [community repository by its-pointless](https://wiki.termux.com/wiki/Package_Management#By_its-pointless):
    ```
    wget https://its-pointless.github.io/setup-pointless-repo.sh
    bash setup-pointless-repo.sh
    ```

- Install cargo and a nightly version of rustc:
    ```
    pkg install rustc cargo rustc-nightly
    ```

- Prepend the nightly rustc path to your `PATH` in order to use nightly (fish syntax):
    ```
    set -U fish_user_paths $PREFIX/opt/rust-nightly/bin/ $fish_user_paths
    ```

Now `rustc --version` should work and output a nightly version number.

### Install Git and Clone blog_os

We need something to compile, so let's download the `blog_os` repository:

- Install git:
    ```
    pkg install git
    ```

- Clone the `blog_os` repository:
    ```
    git clone https://github.com/phil-opp/blog_os.git
    ```

If you want to clone/push via SSH, you need to install the `openssh` package: `pkg install openssh`.

### Install Xbuild and Bootimage

Now we're ready to install `cargo xbuild` and `bootimage`:

- Run `cargo install`:
    ```
    cargo install cargo-xbuild bootimage
    ```

- Add the cargo bin directory to your `PATH` (fish syntax):
    ```
    set -U fish_user_paths ~/.cargo/bin/ $fish_user_paths
    ```

Now `cargo xbuild` and `bootimage` should be available. However, building does not work yet because `cargo xbuild` needs access to the Rust source code. By default it tries to use rustup for this, but we have no rustup support, so we need a different way.

### Providing the Rust Source Code

The Rust source code corresponding to our installed nightly is available in the [`its-pointless` repository](https://github.com/its-pointless/its-pointless.github.io):

- Download a tar containing the source code:
    ```
    wget https://github.com/its-pointless/its-pointless.github.io/raw/master/rust-src-nightly.tar.xz
    ```

- Extract it:
    ```
    tar xf rust-src-nightly.tar.xz
    ```

- Set the `XARGO_RUST_SRC` environment variable to tell cargo-xbuild the source path (fish syntax):
    ```
    set -Ux XARGO_RUST_SRC ~/rust-src-nightly/rust-src/lib/rustlib/src/rust/src
    ```

Now cargo-xbuild should no longer complain about a missing `rust-src` component. However, it will throw an I/O error after building the sysroot. The problem is that the downloaded Rust source code has a different structure than the source provided by rustup. We can fix this by adding a symbolic link:

```
ln -s ~/../usr/opt/rust-nightly/bin ~/../usr/opt/rust-nightly/lib/rustlib/aarch64-linux-android/bin
```

Now `cargo xbuild --target x86_64-blog_os.json` and `bootimage build` should both work!

I couldn't get QEMU to run yet, so you won't be able to run your kernel. If you manage to get it working, please tell me :).

@@ -1,526 +0,0 @@
+++
title = "A Freestanding Rust Binary"
weight = 1
path = "fa/freestanding-rust-binary"
date = 2018-02-10

[extra]
chapter = "Bare Bones"
# Please update this when updating the translation
translation_based_on_commit = "80136cc0474ae8d2da04f391b5281cfcda068c1a"
# GitHub usernames of the people that translated this post
translators = ["hamidrezakp", "MHBahrampour"]
rtl = true
+++

The first step in writing an operating system is to build a Rust binary that does not depend on the standard library. This lets us run Rust code on the [bare metal] hardware without an underlying operating system.

[bare metal]: https://en.wikipedia.org/wiki/Bare_machine

<!-- more -->

This blog is openly developed on [GitHub]. If you have any problems or questions, please open an issue there. You can also leave comments [at the bottom] of this post. The complete source code for this post can be found in the [`post-01`][post branch] branch.

[GitHub]: https://github.com/phil-opp/blog_os
[at the bottom]: #comments
[post branch]: https://github.com/phil-opp/blog_os/tree/post-01

<!-- toc -->

## Introduction

To write an operating system kernel, we need code that does not depend on any operating system features. This means that we can't use threads, files, heap memory, the network, random numbers, standard input, or any other feature that requires OS abstractions or specific hardware. That makes sense, since we're trying to write our own OS and our own drivers.

Not having OS abstractions means that we can't use most of the [Rust standard library], but there are still a lot of Rust features that we can use. For example, we can use [iterators], [closures], [pattern matching], [option] and [result], [string formatting], and of course the [ownership system]. These features make it possible to write a kernel in an expressive, high-level way without worrying about [undefined behavior] or [memory safety].

[option]: https://doc.rust-lang.org/core/option/
[result]: https://doc.rust-lang.org/core/result/
[Rust standard library]: https://doc.rust-lang.org/std/
[iterators]: https://doc.rust-lang.org/book/ch13-02-iterators.html
[closures]: https://doc.rust-lang.org/book/ch13-01-closures.html
[pattern matching]: https://doc.rust-lang.org/book/ch06-00-enums.html
[string formatting]: https://doc.rust-lang.org/core/macro.write.html
[ownership system]: https://doc.rust-lang.org/book/ch04-00-understanding-ownership.html
[undefined behavior]: https://www.nayuki.io/page/undefined-behavior-in-c-and-cplusplus-programs
[memory safety]: https://tonyarcieri.com/it-s-time-for-a-memory-safety-intervention
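
To make the list of usable features a bit more concrete, here is a minimal sketch that relies only on items from `core`: iterators, closures, `Option`, `Result`, and string formatting into a fixed-size buffer on the stack. The `FixedBuf` type and the function names are made up for this illustration and are not part of `blog_os`; the snippet is written so that it also compiles in an ordinary hosted crate.

```rust
use core::fmt::{self, Write};

/// A fixed-capacity text buffer that lives entirely on the stack.
/// (Hypothetical helper for this sketch; not part of `blog_os`.)
pub struct FixedBuf {
    bytes: [u8; 64],
    len: usize,
}

impl FixedBuf {
    pub const fn new() -> Self {
        FixedBuf { bytes: [0; 64], len: 0 }
    }

    pub fn as_str(&self) -> &str {
        // Only whole `&str` chunks are ever copied in, so the bytes stay valid UTF-8.
        core::str::from_utf8(&self.bytes[..self.len]).unwrap_or("")
    }
}

impl Write for FixedBuf {
    fn write_str(&mut self, s: &str) -> fmt::Result {
        let end = self.len + s.len();
        if end > self.bytes.len() {
            return Err(fmt::Error); // buffer full: report an error instead of allocating
        }
        self.bytes[self.len..end].copy_from_slice(s.as_bytes());
        self.len = end;
        Ok(())
    }
}

/// Iterators, closures, and `Option` all come from `core`.
pub fn largest_even_square(values: &[u32]) -> Option<u32> {
    values.iter().map(|v| v * v).filter(|sq| sq % 2 == 0).max()
}

/// `Result` and string formatting via `core::fmt::Write`, with no heap involved.
pub fn describe(values: &[u32]) -> Result<FixedBuf, fmt::Error> {
    let mut buf = FixedBuf::new();
    match largest_even_square(values) {
        Some(sq) => write!(buf, "largest even square: {}", sq)?,
        None => write!(buf, "no even squares")?,
    }
    Ok(buf)
}
```

Calling `describe(&[1, 2, 3, 4])` yields a buffer whose `as_str()` is `"largest even square: 16"`. The point of the design is that nothing here touches `std`, so the same code could be reused later inside a kernel.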

In order to create an OS kernel in Rust, we need to create an executable that can run without an underlying operating system. Such an executable is often called a “freestanding” or “bare-metal” executable.

This post describes the necessary steps to create a freestanding Rust binary and explains why these steps are needed. If you're not interested in reading the whole explanation, you can **[jump to the summary](#summary)**.

## Disabling the Standard Library

By default, all Rust crates link the [standard library], which depends on the operating system for features such as threads, files, or networking. It also depends on the C standard library `libc`, which closely interacts with OS services. Since our plan is to write an operating system, we can't use any OS-dependent libraries. So we have to disable the automatic inclusion of the standard library through the [`no_std` attribute].

[standard library]: https://doc.rust-lang.org/std/
[`no_std` attribute]: https://doc.rust-lang.org/1.30.0/book/first-edition/using-rust-without-the-standard-library.html

We start by creating a new cargo application project. The easiest way to do this is through the command line:

```
cargo new blog_os --bin --edition 2018
```

I named the project `blog_os`, but of course you can choose your own name. The `--bin` flag specifies that we want to create an executable binary (in contrast to a library), and the `--edition 2018` flag specifies that we want to use the [2018 edition] of Rust for our crate. When we run the command, cargo creates the following directory structure for us:

[2018 edition]: https://doc.rust-lang.org/nightly/edition-guide/rust-2018/index.html

```
blog_os
├── Cargo.toml
└── src
    └── main.rs
```

The `Cargo.toml` file contains the crate configuration, for example the crate name, the author, the [semantic version] number, and the dependencies. The `src/main.rs` file contains the root module of our crate and our `main` function. You can compile your crate with `cargo build` and then run the compiled `blog_os` binary in the `target/debug` subfolder.

[semantic version]: https://semver.org/

### The `no_std` Attribute

Right now our crate implicitly links the standard library. Let's try to disable this by adding the [`no_std` attribute]:

```rust
// main.rs

#![no_std]

fn main() {
    println!("Hello, world!");
}
```

When we try to build it now (by running `cargo build`), the following error occurs:

```
error: cannot find macro `println!` in this scope
 --> src/main.rs:4:5
  |
4 |     println!("Hello, world!");
  |     ^^^^^^^
```

The reason for this error is that the [`println` macro] is part of the standard library, which we no longer include. So we can no longer print things. This makes sense, since `println` writes to [standard output], which is a special file descriptor provided by the operating system.

[`println` macro]: https://doc.rust-lang.org/std/macro.println.html
[standard output]: https://en.wikipedia.org/wiki/Standard_streams#Standard_output_.28stdout.29

So let's remove the printing and try again with an empty `main` function:

```rust
// main.rs

#![no_std]

fn main() {}
```

```
> cargo build
error: `#[panic_handler]` function required, but not found
error: language item required, but not found: `eh_personality`
```

Now the compiler is missing a `#[panic_handler]` function and a _language item_.

## Panic Implementation

The `panic_handler` attribute defines the function that the compiler should invoke when a [panic] occurs. The standard library provides its own panic handler function, but in a `no_std` environment we need to define it ourselves:

[panic]: https://doc.rust-lang.org/stable/book/ch09-01-unrecoverable-errors-with-panic.html

```rust
// in main.rs

use core::panic::PanicInfo;

/// This function is called on panic.
#[panic_handler]
fn panic(_info: &PanicInfo) -> ! {
    loop {}
}
```

The [`PanicInfo` parameter][PanicInfo] contains the file and line where the panic happened and the optional panic message. The function should never return, so it is marked as a [diverging function] by returning the [“never” type] `!`. There is not much we can do in this function for now, so we just loop indefinitely.

[PanicInfo]: https://doc.rust-lang.org/nightly/core/panic/struct.PanicInfo.html
[diverging function]: https://doc.rust-lang.org/1.30.0/book/first-edition/functions.html#diverging-functions
[“never” type]: https://doc.rust-lang.org/nightly/std/primitive.never.html
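
We can't easily exercise a `#[panic_handler]` in a normal program, but the hosted standard library hands similar information to a panic hook. The following sketch is an analogy under that assumption, not the kernel's code: it temporarily installs a hook with `std::panic::set_hook` and records the file and line of a panic. The helper name `panic_location_of` is hypothetical.

```rust
use std::panic;
use std::sync::{Arc, Mutex};

/// Runs `f` and, if it panics, returns the (file, line) reported by the panic info.
/// Hosted-only illustration; a `no_std` kernel would read the same data
/// from the `&PanicInfo` argument of its `#[panic_handler]`.
pub fn panic_location_of<F>(f: F) -> Option<(String, u32)>
where
    F: FnOnce() + panic::UnwindSafe,
{
    let seen = Arc::new(Mutex::new(None));
    let sink = Arc::clone(&seen);

    // Swap in a hook that only records the location, remembering the old one.
    let previous = panic::take_hook();
    panic::set_hook(Box::new(move |info| {
        if let Some(loc) = info.location() {
            *sink.lock().unwrap() = Some((loc.file().to_string(), loc.line()));
        }
    }));

    // Catch the panic so that the caller keeps running.
    let _ = panic::catch_unwind(f);
    panic::set_hook(previous);

    let result = seen.lock().unwrap().clone();
    result
}
```

For example, `panic_location_of(|| panic!("boom"))` returns `Some((file, line))` pointing at the `panic!` call, while a closure that does not panic yields `None`. Note that the panic hook is process-wide, so this helper is only suitable for small experiments.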

## The `eh_personality` Language Item

Language items are special functions and types that are required internally by the compiler. For example, the [`Copy`] trait is a language item that tells the compiler which types have [_copy semantics_][`Copy`]. When we look at the [implementation][copy code], we see that it has the special `#[lang = "copy"]` attribute that defines it as a language item.

[`Copy`]: https://doc.rust-lang.org/nightly/core/marker/trait.Copy.html
[copy code]: https://github.com/rust-lang/rust/blob/485397e49a02a3b7ff77c17e4a3f16c653925cb3/src/libcore/marker.rs#L296-L299
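
As a small illustration of what copy semantics mean in practice, consider the sketch below. The `Point` type and the function names are invented for this example; the only thing taken from the text is that implementing `Copy` tells the compiler a value may be duplicated instead of moved.

```rust
/// `Copy` types are duplicated bit-for-bit on assignment, so the original stays usable.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Point {
    pub x: i32,
    pub y: i32,
}

/// Takes `Point` by value; because `Point: Copy`, the caller keeps its own copy.
pub fn shifted(mut p: Point, dx: i32) -> Point {
    p.x += dx;
    p
}

pub fn copy_demo() -> (Point, Point) {
    let a = Point { x: 1, y: 2 };
    let b = shifted(a, 10); // `a` is copied here, not moved
    (a, b) // so using `a` afterwards still compiles
}
```

Without the `Copy` derive, the last line of `copy_demo` would be rejected with a "use of moved value" error, which is exactly the distinction the compiler draws with the help of this language item.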

While providing custom implementations of language items is possible, it should only be done as a last resort. The reason is that language items are highly unstable implementation details and are not even type checked (so the compiler doesn't even check whether a function has the right argument types). Fortunately, there is a more stable way to fix the above language item error.

The [`eh_personality` language item] marks a function that is used for implementing [stack unwinding]. By default, Rust uses unwinding to run the destructors of all live stack variables in case of a [panic]. This ensures that all used memory is freed and allows the parent thread to catch the panic and continue execution. Unwinding, however, is a complicated process and requires some OS-specific libraries (e.g. [libunwind] on Linux or [structured exception handling] on Windows), so we don't want to use it for our operating system.

[`eh_personality` language item]: https://github.com/rust-lang/rust/blob/edb368491551a77d77a48446d4ee88b35490c565/src/libpanic_unwind/gcc.rs#L11-L45
[stack unwinding]: https://www.bogotobogo.com/cplusplus/stackunwinding.php
[libunwind]: https://www.nongnu.org/libunwind/
[structured exception handling]: https://docs.microsoft.com/de-de/windows/win32/debug/structured-exception-handling
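
To see what unwinding buys us on a hosted system, here is a small sketch that only works with the standard library and the default `unwind` panic strategy. The `Guard` type and `unwinding_demo` function are hypothetical names for this example. It panics while a value with a destructor is alive and then checks that the destructor ran and that the panic could be caught.

```rust
use std::panic;
use std::sync::atomic::{AtomicBool, Ordering};

/// Sets the referenced flag when dropped, so we can observe the destructor running.
struct Guard<'a>(&'a AtomicBool);

impl<'a> Drop for Guard<'a> {
    fn drop(&mut self) {
        self.0.store(true, Ordering::SeqCst);
    }
}

/// Panics while a `Guard` is alive and reports whether (a) the panic was
/// caught and (b) the guard's destructor ran during unwinding.
pub fn unwinding_demo() -> (bool, bool) {
    let dropped = AtomicBool::new(false);

    let outcome = panic::catch_unwind(panic::AssertUnwindSafe(|| {
        let _guard = Guard(&dropped);
        panic!("simulated failure");
    }));

    (outcome.is_err(), dropped.load(Ordering::SeqCst))
}
```

With unwinding enabled, `unwinding_demo()` returns `(true, true)`. With the abort strategy there is nothing to catch: the process simply terminates at the `panic!`.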

### Disabling Unwinding

There are other use cases as well for which unwinding is undesirable, so Rust provides an option to [abort on panic] instead. This disables the generation of unwinding symbol information and thus considerably reduces binary size. There are multiple places where we can disable unwinding. The easiest way is to add the following lines to our `Cargo.toml`:

```toml
[profile.dev]
panic = "abort"

[profile.release]
panic = "abort"
```

This sets the panic strategy to `abort` for both the `dev` profile (used for `cargo build`) and the `release` profile (used for `cargo build --release`). Now the `eh_personality` language item should no longer be required.

[abort on panic]: https://github.com/rust-lang/rust/pull/32900

Now we have fixed both of the above errors. However, if we try to compile it now, another error occurs:

```
> cargo build
error: requires `start` lang_item
```

Our program is missing the `start` language item, which defines the entry point.

## The `start` Attribute

One might think that the `main` function is the first function called when you run a program. However, most languages have a [runtime system], which is responsible for things such as garbage collection (e.g. in Java) or software threads (e.g. goroutines in Go). This runtime needs to be called before `main`, since it needs to initialize itself.

[runtime system]: https://en.wikipedia.org/wiki/Runtime_system

In a typical Rust binary that links the standard library, execution starts in a C runtime library called `crt0` (“C runtime zero”), which sets up the environment for a C application. This includes creating a stack and placing the arguments in the right registers. The C runtime then invokes the [entry point of the Rust runtime][rt::lang_start], which is marked by the `start` language item. Rust only has a very minimal runtime, which takes care of some small things such as setting up stack overflow guards or printing a backtrace on panic. The runtime then finally calls the `main` function.

[rt::lang_start]: https://github.com/rust-lang/rust/blob/bb4d1491466d8239a7a5fd68bd605e3276e97afb/src/libstd/rt.rs#L32-L73

Our freestanding executable does not have access to the Rust runtime and `crt0`, so we need to define our own entry point. Implementing the `start` language item wouldn't help, since it would still require `crt0`. Instead, we need to overwrite the `crt0` entry point directly.

### Overwriting the Entry Point

To tell the Rust compiler that we don't want to use the normal entry point chain, we add the `#![no_main]` attribute.

```rust
#![no_std]
#![no_main]

use core::panic::PanicInfo;

/// This function is called on panic.
#[panic_handler]
fn panic(_info: &PanicInfo) -> ! {
    loop {}
}
```

You might notice that we removed the `main` function. The reason is that a `main` doesn't make sense without an underlying runtime that calls it. Instead, we are now overwriting the operating system entry point with our own `_start` function:

```rust
#[no_mangle]
pub extern "C" fn _start() -> ! {
    loop {}
}
```

By using the `#[no_mangle]` attribute, we disable [name mangling] to ensure that the Rust compiler really outputs a function with the name `_start`. Without the attribute, the compiler would generate some cryptic `_ZN3blog_os4_start7hb173fedf945531caE` symbol to give every function a unique name. The attribute is required because we need to tell the linker the name of the entry point function in the next step.

We also have to mark the function as `extern "C"` to tell the compiler that it should use the [C calling convention] for this function (instead of the unspecified Rust calling convention). The reason for naming the function `_start` is that this is the default entry point name for most systems.

[name mangling]: https://en.wikipedia.org/wiki/Name_mangling
[C calling convention]: https://en.wikipedia.org/wiki/Calling_convention

The `!` return type means that the function is diverging, i.e. not allowed to ever return. This is required because the entry point is not called by any function, but invoked directly by the operating system or bootloader. So instead of returning, the entry point should e.g. invoke the [`exit` system call] of the operating system. In our case, shutting down the machine could be a reasonable action, since there's nothing left to do if a freestanding binary returns. For now, we fulfill the requirement by looping endlessly.

[`exit` system call]: https://en.wikipedia.org/wiki/Exit_(system_call)
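
The two ingredients of the `_start` signature, the C calling convention and the `!` return type, can also be tried out in an ordinary hosted program. The sketch below is only an illustration; `add`, `halt`, `checked_div`, and `call_via_c_abi` are made-up names, and `halt` panics instead of looping so that it stays usable on a normal system.

```rust
/// A function using the C calling convention, like `_start` in the post.
pub extern "C" fn add(a: i32, b: i32) -> i32 {
    a + b
}

/// A diverging function: the `!` return type promises that it never returns.
pub fn halt(reason: &str) -> ! {
    panic!("halt: {}", reason)
}

/// Because `!` coerces to any type, a diverging call can sit in one match arm.
pub fn checked_div(a: i32, b: i32) -> i32 {
    match b {
        0 => halt("division by zero"),
        _ => a / b,
    }
}

pub fn call_via_c_abi() -> i32 {
    // The ABI is part of the function pointer's type.
    let f: extern "C" fn(i32, i32) -> i32 = add;
    f(2, 3)
}
```

Here `call_via_c_abi()` evaluates to `5` and `checked_div(7, 2)` to `3`. The compiler accepts `halt(...)` in a position where an `i32` is expected precisely because control never comes back from it.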

When we run `cargo build` now, we get an ugly _linker_ error.

## Linker Errors

The linker is a program that combines the generated code into an executable. Since the executable format differs between Linux, Windows, and macOS, each system has its own linker that throws a different error. The fundamental cause of the errors is the same: the default configuration of the linker assumes that our program depends on the C runtime, which it does not.

To solve the errors, we need to tell the linker that it should not include the C runtime. We can do this either by passing a certain set of arguments to the linker or by building for a bare metal target.

### Building for a Bare Metal Target

By default, Rust tries to build an executable that is able to run in your current system environment. For example, if you're using Windows on `x86_64`, Rust tries to build a `.exe` Windows executable that uses `x86_64` instructions. This environment is called your “host” system.

To describe different environments, Rust uses a string called [_target triple_]. You can see the target triple for your host system by running `rustc --version --verbose`:

[_target triple_]: https://clang.llvm.org/docs/CrossCompilation.html#target-triple

```
rustc 1.35.0-nightly (474e7a648 2019-04-07)
binary: rustc
commit-hash: 474e7a6486758ea6fc761893b1a49cd9076fb0ab
commit-date: 2019-04-07
host: x86_64-unknown-linux-gnu
release: 1.35.0-nightly
LLVM version: 8.0
```

The above output is from an `x86_64` Linux system. We see that the `host` triple is `x86_64-unknown-linux-gnu`, which includes the CPU architecture (`x86_64`), the vendor (`unknown`), the operating system (`linux`), and the [ABI] (`gnu`).

[ABI]: https://en.wikipedia.org/wiki/Application_binary_interface
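
The structure of such a triple can be sketched with a tiny parser. This is a deliberately simplified illustration and not how `rustc` or LLVM handle target names: it assumes either the four-part form `arch-vendor-os-abi` or a three-part form with `none` in the middle (as in `thumbv7em-none-eabihf`), and it rejects everything else. `TargetTriple` and `parse_triple` are hypothetical names.

```rust
/// The components of a target triple, as described above.
#[derive(Debug, PartialEq)]
pub struct TargetTriple<'a> {
    pub arch: &'a str,
    pub vendor: Option<&'a str>,
    pub os: &'a str,
    pub abi: Option<&'a str>,
}

/// Splits a triple on `-`.
/// Simplifying assumption: only `arch-vendor-os-abi` and `arch-none-abi` are understood.
pub fn parse_triple<'a>(triple: &'a str) -> Option<TargetTriple<'a>> {
    let parts: Vec<&str> = triple.split('-').collect();
    match parts.len() {
        4 => Some(TargetTriple {
            arch: parts[0],
            vendor: Some(parts[1]),
            os: parts[2],
            abi: Some(parts[3]),
        }),
        // Bare metal triples in this form omit the vendor and use `none` as the OS.
        3 if parts[1] == "none" => Some(TargetTriple {
            arch: parts[0],
            vendor: None,
            os: parts[1],
            abi: Some(parts[2]),
        }),
        _ => None,
    }
}
```

For the host triple above, `parse_triple("x86_64-unknown-linux-gnu")` yields the architecture `x86_64`, the vendor `unknown`, the OS `linux`, and the ABI `gnu`. Real-world triples are less regular than this, so treat the function as a reading aid only.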

By compiling for our host triple, the Rust compiler and the linker assume that there is an underlying operating system such as Linux or Windows that uses the C runtime by default, which causes the linker errors. So to avoid the linker errors, we can compile for a different environment with no underlying operating system.

An example of such a bare metal environment is the `thumbv7em-none-eabihf` target triple, which describes an [embedded] [ARM] system. The details are not important; all that matters is that the target triple has no underlying operating system, which is indicated by the `none` in the target triple. To be able to compile for this target, we need to add it in rustup:

[embedded]: https://en.wikipedia.org/wiki/Embedded_system
[ARM]: https://en.wikipedia.org/wiki/ARM_architecture

```
rustup target add thumbv7em-none-eabihf
```

This downloads a copy of the standard (and core) library for the system. Now we can build our freestanding executable for this target:

```
cargo build --target thumbv7em-none-eabihf
```

By passing a `--target` argument, we [cross compile] our executable for a bare metal target system. Since the target system has no operating system, the linker does not try to link the C runtime and our build succeeds without any linker errors.

[cross compile]: https://en.wikipedia.org/wiki/Cross_compiler

This is the approach that we will use for building our OS kernel. Instead of `thumbv7em-none-eabihf`, we will use a [custom target] that describes an `x86_64` bare metal environment. The details will be explained in the next post.

[custom target]: https://doc.rust-lang.org/rustc/targets/custom.html

### Linker Arguments

Instead of compiling for a bare metal system, it is also possible to resolve the linker errors by passing a certain set of arguments to the linker. This isn't the approach that we will use for our kernel, so this section is optional and only provided for completeness. Click on _"Linker Arguments"_ below to show the optional content.

<details>

<summary>Linker Arguments</summary>

In this section, we discuss the linker errors that occur on Linux, Windows, and macOS, and explain how to solve them by passing additional arguments to the linker. Note that the executable format and the linker differ between operating systems, so a different set of arguments is required for each system.

#### Linux

On Linux, the following linker error occurs (shortened):

```
error: linking with `cc` failed: exit code: 1
  |
  = note: "cc" […]
  = note: /usr/lib/gcc/../x86_64-linux-gnu/Scrt1.o: In function `_start':
          (.text+0x12): undefined reference to `__libc_csu_fini'
          /usr/lib/gcc/../x86_64-linux-gnu/Scrt1.o: In function `_start':
          (.text+0x19): undefined reference to `__libc_csu_init'
          /usr/lib/gcc/../x86_64-linux-gnu/Scrt1.o: In function `_start':
          (.text+0x25): undefined reference to `__libc_start_main'
          collect2: error: ld returned 1 exit status
```

The problem is that the linker includes the startup routine of the C runtime by default, which is also called `_start`. It requires some symbols of the C standard library `libc` that we don't include due to the `no_std` attribute, so the linker can't resolve these references. To solve this, we can tell the linker that it should not link the C startup routine by passing the `-nostartfiles` flag.

One way to pass linker attributes via cargo is the `cargo rustc` command. The command behaves exactly like `cargo build`, but allows passing options to `rustc`, the underlying Rust compiler. `rustc` has the `-C link-arg` flag, which passes an argument to the linker. Combined, our new build command looks like this:

```
cargo rustc -- -C link-arg=-nostartfiles
```

Now our crate builds as a freestanding executable on Linux!

We didn't need to specify the name of our entry point function explicitly, since the linker looks for a function with the name `_start` by default.

#### Windows

On Windows, a different linker error occurs (shortened):

```
error: linking with `link.exe` failed: exit code: 1561
  |
  = note: "C:\\Program Files (x86)\\…\\link.exe" […]
  = note: LINK : fatal error LNK1561: entry point must be defined
```

The "entry point must be defined" error means that the linker can't find the entry point. On Windows, the default entry point name [depends on the used subsystem][windows-subsystems]. For the `CONSOLE` subsystem, the linker looks for a function named `mainCRTStartup`, and for the `WINDOWS` subsystem, it looks for a function named `WinMainCRTStartup`. To override the default and tell the linker to look for our `_start` function instead, we can pass an `/ENTRY` argument to the linker:

[windows-subsystems]: https://docs.microsoft.com/en-us/cpp/build/reference/entry-entry-point-symbol

```
cargo rustc -- -C link-arg=/ENTRY:_start
```

From the different argument format we clearly see that the Windows linker is a completely different program than the Linux linker.

Now a different linker error occurs:

```
error: linking with `link.exe` failed: exit code: 1221
  |
  = note: "C:\\Program Files (x86)\\…\\link.exe" […]
  = note: LINK : fatal error LNK1221: a subsystem can't be inferred and must be
          defined
```

This error occurs because Windows executables can use different [subsystems][windows-subsystems]. For normal programs, they are inferred from the entry point name: if the entry point is named `main`, the `CONSOLE` subsystem is used, and if the entry point is named `WinMain`, the `WINDOWS` subsystem is used. Since our `_start` function has a different name, we need to specify the subsystem explicitly:

```
cargo rustc -- -C link-args="/ENTRY:_start /SUBSYSTEM:console"
```

We use the `CONSOLE` subsystem here, but the `WINDOWS` subsystem would work too. Instead of passing `-C link-arg` multiple times, we use `-C link-args`, which takes a space-separated list of arguments.

With this command, our executable should build successfully on Windows.

#### macOS

On macOS, the following linker error occurs (shortened):

```
error: linking with `cc` failed: exit code: 1
  |
  = note: "cc" […]
  = note: ld: entry point (_main) undefined. for architecture x86_64
          clang: error: linker command failed with exit code 1 […]
```

This error message tells us that the linker can't find an entry point function with the default name `main` (for some reason, all functions are prefixed with a `_` on macOS). To set the entry point to our `_start` function, we pass the `-e` linker argument:

```
cargo rustc -- -C link-args="-e __start"
```

The `-e` flag specifies the name of the entry point function. Since all functions have an additional `_` prefix on macOS, we need to set the entry point to `__start` instead of `_start`.

Now the following linker error occurs:

```
error: linking with `cc` failed: exit code: 1
  |
  = note: "cc" […]
  = note: ld: dynamic main executables must link with libSystem.dylib
          for architecture x86_64
          clang: error: linker command failed with exit code 1 […]
```

macOS [does not officially support statically linked binaries] and requires programs to link the `libSystem` library by default. To override this and link a static binary, we pass the `-static` flag to the linker:

[does not officially support statically linked binaries]: https://developer.apple.com/library/archive/qa/qa1118/_index.html

```
cargo rustc -- -C link-args="-e __start -static"
```

This still does not suffice, as a third linker error occurs:

```
error: linking with `cc` failed: exit code: 1
  |
  = note: "cc" […]
  = note: ld: library not found for -lcrt0.o
          clang: error: linker command failed with exit code 1 […]
```

This error occurs because programs on macOS link to `crt0` (“C runtime zero”) by default. This is similar to the error we had on Linux and can also be solved by adding the `-nostartfiles` linker argument:

```
cargo rustc -- -C link-args="-e __start -static -nostartfiles"
```

Now our program should build successfully on macOS.

#### Unifying the Build Commands

Right now we have different build commands depending on the host platform, which is not ideal. To avoid this, we can create a file named `.cargo/config.toml` that contains the platform-specific arguments:

```toml
# in .cargo/config.toml

[target.'cfg(target_os = "linux")']
rustflags = ["-C", "link-arg=-nostartfiles"]

[target.'cfg(target_os = "windows")']
rustflags = ["-C", "link-args=/ENTRY:_start /SUBSYSTEM:console"]

[target.'cfg(target_os = "macos")']
rustflags = ["-C", "link-args=-e __start -static -nostartfiles"]
```

کلید `rustflags` شامل آرگومانهایی است که بطور خودکار به هر فراخوانی `rustc` اضافه میشوند. برای کسب اطلاعات بیشتر در مورد فایل `cargo/config.toml.` به [اسناد رسمی](https://doc.rust-lang.org/cargo/reference/config.html) مراجعه کنید.

|

||||

|

||||

اکنون برنامه ما باید در هر سه سیستمعامل با یک `cargo build` ساده قابل بیلد باشد.
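
To make the selection concrete, the following hosted Rust sketch mirrors the three `cfg(target_os = ...)` tables from the config file as a plain function. It is only an illustration: `link_args_for` is a hypothetical helper and not part of Cargo, which evaluates the `cfg` expressions against the compilation target on its own.

```rust
/// Hypothetical helper (not a Cargo API): returns the linker arguments that
/// the `.cargo/config.toml` above assigns to a given `target_os` value.
fn link_args_for(target_os: &str) -> Option<&'static str> {
    match target_os {
        "linux" => Some("-nostartfiles"),
        "windows" => Some("/ENTRY:_start /SUBSYSTEM:console"),
        "macos" => Some("-e __start -static -nostartfiles"),
        // No table in the config matches, so no extra flags are added.
        _ => None,
    }
}

fn main() {
    // `std::env::consts::OS` names the OS this sketch was compiled for.
    let os = std::env::consts::OS;
    match link_args_for(os) {
        Some(args) => println!("{os}: {args}"),
        None => println!("{os}: no extra linker arguments"),
    }
}
```

On any other operating system none of the three tables applies, which is why the sketch returns `None` in that case.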

#### Should You Do This?

While it's possible to build a freestanding executable for Linux, Windows, and macOS, it's probably not a good idea. The reason is that our executable still expects various things, for example that a stack is initialized when the `_start` function is called. Without the C runtime, some of these requirements might not be fulfilled, which might cause our program to fail, e.g. through a segmentation fault.

If you want to create a minimal binary that runs on top of an existing operating system, including `libc` and setting the `#[start]` attribute as described [here](https://doc.rust-lang.org/1.16.0/book/no-stdlib.html) is probably a better idea.

</details>

## Summary

A minimal freestanding Rust binary looks like this:

`src/main.rs`:

```rust
#![no_std] // don't link the Rust standard library
#![no_main] // disable all Rust-level entry points

use core::panic::PanicInfo;

#[no_mangle] // don't mangle the name of this function
pub extern "C" fn _start() -> ! {
    // this function is the entry point, since the linker looks for a function
    // named `_start` by default
    loop {}
}

/// This function is called on panic.
#[panic_handler]
fn panic(_info: &PanicInfo) -> ! {
    loop {}
}
```

`Cargo.toml`:

```toml
[package]
name = "crate_name"
version = "0.1.0"
authors = ["Author Name <author@example.com>"]

# the profile used for `cargo build`
[profile.dev]
panic = "abort" # disable stack unwinding on panic

# the profile used for `cargo build --release`
[profile.release]
panic = "abort" # disable stack unwinding on panic
```

To build this binary, we need to compile for a bare metal target such as `thumbv7em-none-eabihf`:

```
cargo build --target thumbv7em-none-eabihf
```

Alternatively, we can compile it for the host system by passing additional linker arguments:

```bash
# Linux
cargo rustc -- -C link-arg=-nostartfiles
# Windows
cargo rustc -- -C link-args="/ENTRY:_start /SUBSYSTEM:console"
# macOS
cargo rustc -- -C link-args="-e __start -static -nostartfiles"
```

Note that this is just a minimal example of a freestanding Rust binary. This binary expects various things, for example that a stack is initialized when the `_start` function is called. **So for any real use of such a binary, more steps are required**.

## What's next?

The [next post] explains the steps needed for turning our freestanding binary into a minimal operating system kernel. This includes creating a custom target, combining our executable with a bootloader, and learning how to print something to the screen.

[next post]: @/second-edition/posts/02-minimal-rust-kernel/index.fa.md

@@ -1,531 +0,0 @@

+++
title = "A Freestanding Rust Binary"
weight = 1
path = "ja/freestanding-rust-binary"
date = 2018-02-10

[extra]
chapter = "Bare Bones"
# Please update this when updating the translation
translation_based_on_commit = "6f1f87215892c2be12c6973a6f753c9a25c34b7e"
# GitHub usernames of the people that translated this post
translators = ["JohnTitor"]
+++

The first step in creating our own operating system (OS) kernel is to create a Rust executable that does not link the standard library. This makes it possible to run Rust code on the [bare metal] without an underlying OS.

[bare metal]: https://en.wikipedia.org/wiki/Bare_machine

<!-- more -->

This blog is openly published and developed on [GitHub]. If you have any problems or questions, please open an issue there (translator's note: the links point to the original English version). You can also leave a comment [at the bottom][comments]. The complete source code for this post can be found in the [`post-01` branch][post branch].

[GitHub]: https://github.com/phil-opp/blog_os
[comments]: #comments
[post branch]: https://github.com/phil-opp/blog_os/tree/post-01

<!-- toc -->

## Introduction

To write an OS kernel, we need code that does not depend on any OS features. This means that we can't use threads, heap memory, the network, random numbers, standard output, or any other feature that requires OS abstractions or specific hardware. This makes sense, since we're trying to write our own OS and our own drivers.

This means that we can't use most of the [Rust standard library], but there are still plenty of Rust features that we can use. For example, we can use [iterators], [closures], [pattern matching], the [`Option`][option] and [`Result`][result] types, [string formatting], and of course the [ownership system]. These features make it possible to write a kernel in an expressive, high-level way without worrying about [undefined behavior] or [memory safety].

[option]: https://doc.rust-lang.org/core/option/
[result]: https://doc.rust-lang.org/core/result/
[Rust standard library]: https://doc.rust-lang.org/std/
[iterators]: https://doc.rust-lang.org/book/ch13-02-iterators.html
[closures]: https://doc.rust-lang.org/book/ch13-01-closures.html
[pattern matching]: https://doc.rust-lang.org/book/ch06-00-enums.html
[string formatting]: https://doc.rust-lang.org/core/macro.write.html
[ownership system]: https://doc.rust-lang.org/book/ch04-00-understanding-ownership.html
[undefined behavior]: https://www.nayuki.io/page/undefined-behavior-in-c-and-cplusplus-programs
[memory safety]: https://tonyarcieri.com/it-s-time-for-a-memory-safety-intervention
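
As a rough illustration of how far `core` alone goes, the sketch below uses an iterator, a closure, `Option`, `Result`, and `write!` formatting without any heap allocation. The names `FixedBuf` and `describe_even_sum` are made up for this example, and the program is a normal hosted one: only `main` and `println!` rely on `std`, so that it can be run on a desktop.

```rust
use core::fmt::{self, Write};

/// A fixed-size text buffer that needs no heap allocation.
struct FixedBuf {
    bytes: [u8; 64],
    len: usize,
}

impl FixedBuf {
    fn new() -> Self {
        FixedBuf { bytes: [0; 64], len: 0 }
    }

    fn as_str(&self) -> &str {
        // Only whole `&str` values are copied in, so the contents are valid UTF-8.
        core::str::from_utf8(&self.bytes[..self.len]).unwrap_or("")
    }
}

impl Write for FixedBuf {
    fn write_str(&mut self, s: &str) -> fmt::Result {
        let end = self.len + s.len();
        // `get_mut` returns an `Option`; `None` means the buffer is too small.
        let dst = self.bytes.get_mut(self.len..end).ok_or(fmt::Error)?;
        dst.copy_from_slice(s.as_bytes());
        self.len = end;
        Ok(())
    }
}

/// Sums the even numbers with an iterator and a closure, then formats the result.
fn describe_even_sum(values: &[u32]) -> Result<FixedBuf, fmt::Error> {
    let sum: u32 = values.iter().filter(|v| **v % 2 == 0).sum();
    let mut buf = FixedBuf::new();
    write!(buf, "even sum = {}", sum)?;
    Ok(buf)
}

fn main() {
    // `main` and `println!` come from `std`; everything above uses only `core`.
    match describe_even_sum(&[1, 2, 3, 4]) {
        Ok(buf) => println!("{}", buf.as_str()),
        Err(_) => println!("buffer too small"),
    }
}
```

In a kernel, the same `core::fmt::Write` implementation could target a screen or serial buffer instead of a byte array.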

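To write an OS kernel in Rust, we need to create an execution environment that works without an underlying OS. Such an environment is often called "freestanding" or "bare-metal".

This post describes the steps needed to create a freestanding Rust binary and explains why they are needed. If you're only interested in a minimal example, you can jump to the **[Summary](#概要)**.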

## Disabling the Standard Library

By default, all Rust crates link the [standard library], which depends on OS features such as threads, files, and networking. It also depends on the C standard library `libc`, which closely interacts with the OS. Since our plan is to write an OS, we can't use any OS-dependent libraries. So we have to disable the automatic inclusion of the standard library through the [`no_std` attribute].

[standard library]: https://doc.rust-lang.org/std/
[`no_std` attribute]: https://doc.rust-lang.org/1.30.0/book/first-edition/using-rust-without-the-standard-library.html

We start by creating a new Cargo project. The easiest way to do this is through the command line:

```bash
cargo new blog_os --bin --edition 2018
```

I named the project `blog_os`, but of course you can choose your own name. The `--bin` flag specifies that we want to create an executable binary, and `--edition 2018` explicitly specifies that we want to use the [2018 edition]. When we run the command, Cargo creates the following directory structure:

[2018 edition]: https://doc.rust-lang.org/nightly/edition-guide/rust-2018/index.html

```bash
blog_os
├── Cargo.toml
└── src
    └── main.rs
```

`Cargo.toml` contains the crate configuration, such as the crate name, the author, the [semantic version] number, and the dependencies. `src/main.rs` contains the root module of our crate and our `main` function. You can compile the crate with `cargo build` and then run the compiled `blog_os` binary in the `target/debug` directory.

[semantic version]: https://semver.org/

### The `no_std` Attribute

Right now our crate implicitly links the standard library. Let's try to disable this by adding the [`no_std` attribute]:

```rust
// main.rs

#![no_std]

fn main() {
    println!("Hello, world!");
}
```

When we try to build it now (by running `cargo build`), the following error occurs:

```bash
error: cannot find macro `println!` in this scope
 --> src/main.rs:4:5
  |
4 |     println!("Hello, world!");
  |     ^^^^^^^
```

The reason for this error is that the [`println` macro] is part of the standard library. Since we disabled the standard library with `no_std`, we can no longer print things. This makes sense, since `println` writes to [standard output], which is a special file descriptor provided by the OS.

[`println` macro]: https://doc.rust-lang.org/std/macro.println.html
[standard output]: https://en.wikipedia.org/wiki/Standard_streams#Standard_output_.28stdout.29

So let's remove the `println` and try again with an empty `main` function:

```rust
// main.rs

#![no_std]

fn main() {}
```

```bash
> cargo build
error: `#[panic_handler]` function required, but not found
error: language item required, but not found: `eh_personality`
```

Now the compiler reports a missing `#[panic_handler]` function and a missing language item.

## Panic Implementation

The `panic_handler` attribute defines the function that the compiler invokes when a [panic] occurs. The standard library provides its own panic handler function, but in a `no_std` environment we need to define it ourselves:

[panic]: https://doc.rust-lang.org/stable/book/ch09-01-unrecoverable-errors-with-panic.html

```rust
// in main.rs

use core::panic::PanicInfo;

/// This function is called on panic.
#[panic_handler]
fn panic(_info: &PanicInfo) -> ! {
    loop {}
}
```

The [`PanicInfo` parameter][PanicInfo] contains the file and line where the panic happened and, optionally, the panic message. The function should never return, so it is marked as a [diverging function] by returning the ["never" type][“never” type] `!`. There is not much we can do in this function for now, so we just loop indefinitely.

[PanicInfo]: https://doc.rust-lang.org/nightly/core/panic/struct.PanicInfo.html
[diverging function]: https://doc.rust-lang.org/1.30.0/book/first-edition/functions.html#diverging-functions
[“never” type]: https://doc.rust-lang.org/nightly/std/primitive.never.html

## The `eh_personality` Language Item

Language items are special functions and types that are required internally by the compiler. For example, the [`Copy`] trait is a language item that tells the compiler which types have [copy semantics][`Copy`]. When we look at the [implementation][copy code], we see that it has the special `#[lang = "copy"]` attribute that defines it as a language item.

[`Copy`]: https://doc.rust-lang.org/nightly/core/marker/trait.Copy.html
[copy code]: https://github.com/rust-lang/rust/blob/485397e49a02a3b7ff77c17e4a3f16c653925cb3/src/libcore/marker.rs#L296-L299

While providing custom implementations of language items is possible, it should only be done as a last resort. The reason is that language items are highly unstable implementation details and not even type checked (so the compiler doesn't even check whether a function has the right argument types). Fortunately, there is a more stable way to fix the language item error above.

The [`eh_personality` language item] marks a function that is used for implementing [stack unwinding]. By default, Rust uses unwinding to run the destructors of all live stack variables when a panic occurs. This ensures that all used memory is freed and allows the parent thread to catch the panic and continue execution. Unwinding, however, is a complicated process and requires some OS-specific libraries (e.g. [libunwind] on Linux or [structured exception handling] on Windows), so we don't want to use it for our OS.

[`eh_personality` language item]: https://github.com/rust-lang/rust/blob/edb368491551a77d77a48446d4ee88b35490c565/src/libpanic_unwind/gcc.rs#L11-L45
[stack unwinding]: https://www.bogotobogo.com/cplusplus/stackunwinding.php
[libunwind]: https://www.nongnu.org/libunwind/
[structured exception handling]: https://docs.microsoft.com/de-de/windows/win32/debug/structured-exception-handling
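
To see what unwinding does in a hosted program, the following sketch checks that a destructor runs while a panic unwinds the stack. It assumes the default `panic = "unwind"` strategy and the standard library, so it is a desktop experiment rather than kernel code; `Guard`, `DROPPED`, and the function names are invented for this example.

```rust
use std::panic;
use std::sync::atomic::{AtomicBool, Ordering};

/// Set to `true` by `Guard::drop`, so we can observe whether the destructor ran.
static DROPPED: AtomicBool = AtomicBool::new(false);

struct Guard;

impl Drop for Guard {
    fn drop(&mut self) {
        DROPPED.store(true, Ordering::SeqCst);
    }
}

fn panic_while_holding_guard() {
    let _guard = Guard; // a live stack variable with a destructor
    panic!("something went wrong");
}

/// Returns `true` if the panic was caught and the guard's destructor ran.
/// Assumes the default unwinding strategy; with `panic = "abort"` the
/// process would terminate inside `panic_while_holding_guard` instead.
fn destructor_runs_during_unwind() -> bool {
    DROPPED.store(false, Ordering::SeqCst);
    let result = panic::catch_unwind(panic_while_holding_guard);
    result.is_err() && DROPPED.load(Ordering::SeqCst)
}

fn main() {
    println!("destructor ran: {}", destructor_runs_during_unwind());
}
```

The panic message is still printed to standard error by the default panic hook; the point is only that `Guard::drop` runs before `catch_unwind` returns.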

### Disabling Unwinding

There are other use cases as well for which unwinding is undesirable, so Rust provides an option to [abort on panic] instead. This disables the generation of unwinding symbol information and thus considerably reduces binary size. There are multiple ways to disable unwinding. The easiest is to add the following lines to our `Cargo.toml`:

```toml
[profile.dev]
panic = "abort"

[profile.release]
panic = "abort"
```

This sets the panic strategy to `abort` for both the `dev` profile (used for `cargo build`) and the `release` profile (used for `cargo build --release`). Now the `eh_personality` language item is no longer required.

[abort on panic]: https://github.com/rust-lang/rust/pull/32900

We have now fixed both of the errors above. However, if we try to compile, another error occurs:

```bash
> cargo build
error: requires `start` lang_item
```

Our program is missing the `start` language item, which defines the entry point.

## The `start` Attribute

One might think that the `main` function is the first function called when a program runs. However, most languages have a [runtime system], which is responsible for things such as garbage collection (e.g. in Java) or software threads (e.g. goroutines in Go). This runtime needs to be called before `main`, since it needs to initialize itself. This includes creating a stack and placing the arguments in the right registers.

[runtime system]: https://en.wikipedia.org/wiki/Runtime_system

In a typical Rust binary that links the standard library, execution starts in a C runtime library called `crt0` ("C runtime zero"), which sets up the environment for a C application. The C runtime then invokes the [entry point of the Rust runtime][rt::lang_start], which is marked by the `start` language item. Rust only has a very minimal runtime, which takes care of some small things such as setting up stack overflow guards or printing a backtrace on panic. Finally, the runtime calls the `main` function.

[rt::lang_start]: https://github.com/rust-lang/rust/blob/bb4d1491466d8239a7a5fd68bd605e3276e97afb/src/libstd/rt.rs#L32-L73

Our freestanding executable does not have access to the Rust runtime or to `crt0`, so we need to define our own entry point. Implementing the `start` language item wouldn't help, since it would still require `crt0`. Instead, we need to overwrite the `crt0` entry point directly.

### Overwriting the Entry Point

To tell the Rust compiler that we don't want to use the normal entry point, we add the `#![no_main]` attribute.

```rust
#![no_std]
#![no_main]

use core::panic::PanicInfo;

/// This function is called on panic.
#[panic_handler]
fn panic(_info: &PanicInfo) -> ! {
    loop {}
}
```

You might notice that we removed the `main` function. The reason is that a `main` function doesn't make sense without an underlying runtime that calls it. Instead, we now overwrite the operating system entry point with our own `_start` function:

```rust
#[no_mangle]
pub extern "C" fn _start() -> ! {
    loop {}
}
```

By using the `#[no_mangle]` attribute, we disable [name mangling] to ensure that the Rust compiler really outputs a function with the name `_start`. Without the attribute, the compiler would generate a symbol such as `_ZN3blog_os4_start7hb173fedf945531caE` to give every function a unique name. The attribute is required because we need to tell the linker the name of the entry point function in the next step.

We also have to mark the function as `extern "C"` to tell the compiler that it should use the [C calling convention] for this function (instead of the unspecified Rust calling convention). The reason for naming the function `_start` is that this is the default entry point name for most systems.

[name mangling]: https://en.wikipedia.org/wiki/Name_mangling
[C calling convention]: https://en.wikipedia.org/wiki/Calling_convention

The `!` return type means that the function is diverging, i.e. it is not allowed to ever return. This is required because the entry point is not called by any function, but invoked directly by the operating system or bootloader. So instead of returning, the entry point should, for example, invoke the [`exit` system call] of the operating system. In our case, shutting down the machine could be a reasonable action, since there is nothing left to do if a freestanding binary returns. For now, we fulfill the requirement by looping endlessly.

[`exit` system call]: https://en.wikipedia.org/wiki/Exit_(system_call)

When we run `cargo build` now, we get an ugly linker error.

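## Linker Errors

The linker is a program that combines the generated code into an executable. Since the executable format differs between Linux, Windows, and macOS, each system has its own linker that throws a different error. The fundamental cause of the errors is the same: the default configuration of the linker assumes that our program depends on the C runtime, which it does not.

To solve the errors, we need to tell the linker that it should not include the C runtime. We can do this either by passing a certain set of arguments to the linker or by building for a bare metal target.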

### Building for a Bare Metal Target

By default, Rust tries to build an executable that is able to run in your current system environment. For example, if you're using Windows on `x86_64`, Rust tries to build an `.exe` Windows executable for `x86_64`. This environment is called your "host" system.

To describe different environments, Rust uses a string called a [_target triple_]. You can see the target triple of your host system by running `rustc --version --verbose`:

[_target triple_]: https://clang.llvm.org/docs/CrossCompilation.html#target-triple

```bash
rustc 1.35.0-nightly (474e7a648 2019-04-07)
binary: rustc
commit-hash: 474e7a6486758ea6fc761893b1a49cd9076fb0ab
commit-date: 2019-04-07
host: x86_64-unknown-linux-gnu
release: 1.35.0-nightly
LLVM version: 8.0
```

The above output is from an `x86_64` Linux system. The `host` triple is `x86_64-unknown-linux-gnu`, which includes the CPU architecture (`x86_64`), the vendor (`unknown`), the operating system (`linux`), and the [ABI] (`gnu`).

[ABI]: https://en.wikipedia.org/wiki/Application_binary_interface
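
The decomposition of a triple can be sketched in a few lines of Rust. This is a simplified illustration, not how `rustc` parses targets: the hypothetical `parse_triple` helper only accepts the full four-part `arch-vendor-os-abi` form and deliberately gives up on shorter triples such as `thumbv7em-none-eabihf`, which omit a component.

```rust
/// The four components of a full target triple such as `x86_64-unknown-linux-gnu`.
#[derive(Debug, PartialEq)]
struct TargetTriple<'a> {
    arch: &'a str,
    vendor: &'a str,
    os: &'a str,
    abi: &'a str,
}

/// Splits a triple of the form `arch-vendor-os-abi`.
/// Returns `None` for other forms, which this sketch does not try to interpret.
fn parse_triple(triple: &str) -> Option<TargetTriple<'_>> {
    let mut parts = triple.split('-');
    let parsed = TargetTriple {
        arch: parts.next()?,
        vendor: parts.next()?,
        os: parts.next()?,
        abi: parts.next()?,
    };
    // Reject trailing components instead of silently ignoring them.
    if parts.next().is_some() {
        return None;
    }
    Some(parsed)
}

fn main() {
    match parse_triple("x86_64-unknown-linux-gnu") {
        Some(t) => println!("arch={} vendor={} os={} abi={}", t.arch, t.vendor, t.os, t.abi),
        None => println!("not a four-part triple"),
    }
}
```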

By compiling for our host triple, the Rust compiler and the linker assume that there is an underlying operating system such as Linux or Windows that uses the C runtime by default, which causes the linker errors. So, to avoid the linker errors, we can compile for a different environment with no underlying operating system.

An example of such a bare metal environment is the `thumbv7em-none-eabihf` target triple, which describes an [embedded] system. The details are not important; what matters is that the target triple has no underlying operating system, which is indicated by the `none` in the triple. To be able to compile for this target, we need to add it in rustup:

[embedded]: https://en.wikipedia.org/wiki/Embedded_system

```bash
rustup target add thumbv7em-none-eabihf
```

This downloads a copy of the standard (and core) library for this target triple. Then we can build our freestanding executable for this target:

```bash
cargo build --target thumbv7em-none-eabihf
```

By passing a `--target` argument, we [cross compile] our executable for a bare metal target system. Since the target system has no operating system, the linker does not try to link the C runtime and our build succeeds without any linker errors.

[cross compile]: https://en.wikipedia.org/wiki/Cross_compiler

This is the approach that we will use for building our OS kernel. Instead of `thumbv7em-none-eabihf`, we can use a [custom target] that describes an `x86_64` bare metal environment. The details are explained in the next post.

[custom target]: https://doc.rust-lang.org/rustc/targets/custom.html

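### Linker Arguments

Instead of compiling for a bare metal target, it is also possible to resolve the linker errors by passing a certain set of arguments to the linker. This isn't the approach that we will use for our kernel, so this section is optional and only provided for completeness. Click on "Linker Arguments" below to show the optional content.

<details>

<summary>Linker Arguments</summary>

In this section we discuss the linker errors that occur on Linux, Windows, and macOS, and explain how to solve them by passing additional arguments to the linker. Since the executable format and the linker differ between operating systems, a different set of arguments is required for each system.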

#### Linux

On Linux the following linker error occurs (shortened):

```
error: linking with `cc` failed: exit code: 1
  |
  = note: "cc" […]
  = note: /usr/lib/gcc/../x86_64-linux-gnu/Scrt1.o: In function `_start':
          (.text+0x12): undefined reference to `__libc_csu_fini'
          /usr/lib/gcc/../x86_64-linux-gnu/Scrt1.o: In function `_start':
          (.text+0x19): undefined reference to `__libc_csu_init'
          /usr/lib/gcc/../x86_64-linux-gnu/Scrt1.o: In function `_start':
          (.text+0x25): undefined reference to `__libc_start_main'
          collect2: error: ld returned 1 exit status
```

The problem is that the linker includes the startup routine of the C runtime by default, which is also called `_start`. That routine requires some symbols of the C standard library `libc`, which we don't include because of the `no_std` attribute, so the linker can't resolve these references. To solve this, we tell the linker not to link the C startup routine by passing the `-nostartfiles` flag.

One way to pass linker arguments via Cargo is the `cargo rustc` command. The command behaves exactly like `cargo build`, but allows passing options to `rustc`, the underlying Rust compiler. `rustc` has the `-C link-arg` flag, which passes an argument to the linker. Our new build command looks like this:

```bash
cargo rustc -- -C link-arg=-nostartfiles
```

Now our crate builds as a freestanding executable on Linux!

We didn't need to specify the name of our entry point function explicitly, since the linker looks for a function named `_start` by default.

#### Windows

On Windows, a different linker error occurs (shortened):

```
error: linking with `link.exe` failed: exit code: 1561
  |
  = note: "C:\\Program Files (x86)\\…\\link.exe" […]
  = note: LINK : fatal error LNK1561: entry point must be defined
```

The "entry point must be defined" error means that the linker can't find the entry point. On Windows, the default entry point name [depends on the used subsystem][windows-subsystems]. For the `CONSOLE` subsystem, the linker looks for a function named `mainCRTStartup`, and for the `WINDOWS` subsystem, it looks for a function named `WinMainCRTStartup`. To override the default and tell the linker to look for our `_start` function instead, we pass an `/ENTRY` argument to the linker:

[windows-subsystems]: https://docs.microsoft.com/en-us/cpp/build/reference/entry-entry-point-symbol

```bash
cargo rustc -- -C link-arg=/ENTRY:_start
```

From the different argument format we can see that the Windows linker is a completely different program than the Linux linker.

Now a different linker error occurs:

```
error: linking with `link.exe` failed: exit code: 1221
  |
  = note: "C:\\Program Files (x86)\\…\\link.exe" […]
  = note: LINK : fatal error LNK1221: a subsystem can't be inferred and must be
          defined
```

This error occurs because Windows executables can use different [subsystems][windows-subsystems]. For normal programs, the subsystem is inferred from the entry point name: if the entry point is named `main`, the `CONSOLE` subsystem is used, and if the entry point is named `WinMain`, the `WINDOWS` subsystem is used. Since our `_start` function has a different name, we need to specify the subsystem explicitly:

```bash
cargo rustc -- -C link-args="/ENTRY:_start /SUBSYSTEM:console"
```

We use the `CONSOLE` subsystem here, but the `WINDOWS` subsystem would work too. Instead of passing `-C link-arg` multiple times, we use `-C link-args`, which takes a space-separated list of arguments.

With this command, our executable should build successfully on Windows.
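
The relationship between subsystem, default entry point, and the resulting linker arguments can be summarized in a small Rust sketch. The `Subsystem` enum and `windows_link_args` function are hypothetical names used only for illustration; the entry point names and flag spellings are the ones quoted above.

```rust
/// The two Windows subsystems discussed above.
#[derive(Debug, Clone, Copy, PartialEq)]
enum Subsystem {
    Console,
    Windows,
}

impl Subsystem {
    /// Default entry point symbol the linker looks for in this subsystem.
    fn default_entry_point(self) -> &'static str {
        match self {
            Subsystem::Console => "mainCRTStartup",
            Subsystem::Windows => "WinMainCRTStartup",
        }
    }

    /// Value for the `/SUBSYSTEM` linker argument.
    fn flag_value(self) -> &'static str {
        match self {
            Subsystem::Console => "console",
            Subsystem::Windows => "windows",
        }
    }
}

/// Builds the space-separated string passed to `-C link-args=`.
fn windows_link_args(entry: &str, subsystem: Subsystem) -> String {
    format!("/ENTRY:{} /SUBSYSTEM:{}", entry, subsystem.flag_value())
}

fn main() {
    let subsystem = Subsystem::Console;
    println!("default entry point: {}", subsystem.default_entry_point());
    println!("link-args: {}", windows_link_args("_start", subsystem));
}
```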

#### macOS

On macOS, the following linker error occurs (shortened):

```
error: linking with `cc` failed: exit code: 1
  |
  = note: "cc" […]
  = note: ld: entry point (_main) undefined. for architecture x86_64
          clang: error: linker command failed with exit code 1 […]
```

This error message tells us that the linker can't find an entry point function with the default name `main` (for some reason, all functions are prefixed with a `_` on macOS). To set the entry point to our `_start` function, we pass the `-e` linker argument:

```bash
cargo rustc -- -C link-args="-e __start"
```

The `-e` flag specifies the name of the entry point function. Since all functions have an additional `_` prefix on macOS, we need to set the entry point to `__start` instead of `_start`.

Now the following linker error occurs:

```
error: linking with `cc` failed: exit code: 1
  |
  = note: "cc" […]
  = note: ld: dynamic main executables must link with libSystem.dylib
          for architecture x86_64
          clang: error: linker command failed with exit code 1 […]
```

macOS [does not officially support statically linked binaries] and requires programs to link the `libSystem` library by default. To override this and link a static binary, we pass the `-static` flag to the linker:

[does not officially support statically linked binaries]: https://developer.apple.com/library/archive/qa/qa1118/_index.html

```bash
cargo rustc -- -C link-args="-e __start -static"
```

This is still not enough; a third linker error occurs:

```
error: linking with `cc` failed: exit code: 1
  |
  = note: "cc" […]
  = note: ld: library not found for -lcrt0.o
          clang: error: linker command failed with exit code 1 […]
```

This error occurs because programs on macOS link to `crt0` ("C runtime zero") by default. This is similar to the error we had on Linux and can also be solved by adding the `-nostartfiles` linker argument:

```bash
cargo rustc -- -C link-args="-e __start -static -nostartfiles"
```

Now our program should build successfully on macOS.

#### Unifying the Build Commands

Right now we have different build commands depending on the host platform, which is not ideal. To avoid this, we can create a file named `.cargo/config.toml` that contains the platform-specific arguments:

```toml
# in .cargo/config.toml

[target.'cfg(target_os = "linux")']
rustflags = ["-C", "link-arg=-nostartfiles"]

[target.'cfg(target_os = "windows")']
rustflags = ["-C", "link-args=/ENTRY:_start /SUBSYSTEM:console"]

[target.'cfg(target_os = "macos")']
rustflags = ["-C", "link-args=-e __start -static -nostartfiles"]
```

The `rustflags` key contains arguments that are automatically added to every invocation of `rustc`. For more information on the `.cargo/config.toml` file, check out the [official documentation].

[official documentation]: https://doc.rust-lang.org/cargo/reference/config.html

Now our program should be buildable on all three platforms with a simple `cargo build`.

#### Should You Do This?

While it's possible to build a freestanding executable for Linux, Windows, and macOS with these steps, it's probably not a good idea. The reason is that our executable still expects various things, for example that a stack is initialized when the `_start` function is called. Without the C runtime, some of these requirements might not be fulfilled, which might cause our program to fail, e.g. through a segmentation fault.

If you want to create a minimal binary that runs on top of an existing operating system, including `libc` and setting the `#[start]` attribute as described [here][no-stdlib] is probably a better idea.

[no-stdlib]: https://doc.rust-lang.org/1.16.0/book/no-stdlib.html

</details>

## Summary

A minimal freestanding Rust binary looks like this:

`src/main.rs`:

```rust
#![no_std] // don't link the Rust standard library
#![no_main] // disable all Rust-level entry points

use core::panic::PanicInfo;

#[no_mangle] // don't mangle the name of this function
pub extern "C" fn _start() -> ! {
    // this function is the entry point, since the linker looks for a function
    // named `_start` by default
    loop {}
}

/// This function is called on panic.
#[panic_handler]
fn panic(_info: &PanicInfo) -> ! {
    loop {}
}
```

`Cargo.toml`:

```toml
[package]
name = "crate_name"
version = "0.1.0"
authors = ["Author Name <author@example.com>"]

# the profile used for `cargo build`
[profile.dev]
panic = "abort" # disable stack unwinding on panic

# the profile used for `cargo build --release`
[profile.release]
panic = "abort" # disable stack unwinding on panic
```

To build this binary, we need to compile for a bare metal target such as `thumbv7em-none-eabihf`:

```bash
cargo build --target thumbv7em-none-eabihf
```

Alternatively, we can compile it for the host system by passing additional linker arguments:

```bash
# Linux
cargo rustc -- -C link-arg=-nostartfiles
# Windows
cargo rustc -- -C link-args="/ENTRY:_start /SUBSYSTEM:console"
# macOS
cargo rustc -- -C link-args="-e __start -static -nostartfiles"
```

Note that this is just a minimal example of a freestanding Rust binary. This binary expects various things, for example that a stack is initialized when the `_start` function is called. **So for any real use of such a binary, more steps are required**.

## What's next?

The [next post] explains the steps needed for turning this freestanding binary into a minimal OS kernel. This includes creating a custom target, combining our executable with a bootloader, and learning how to print something to the screen.

[next post]: @/second-edition/posts/02-minimal-rust-kernel/index.md

@@ -1,519 +0,0 @@

+++
title = "A Freestanding Rust Binary"
weight = 1
path = "freestanding-rust-binary"
date = 2018-02-10

[extra]
chapter = "Bare Bones"
+++

The first step in creating our own operating system kernel is to create a Rust executable that does not link the standard library. This makes it possible to run Rust code on the [bare metal] without an underlying operating system.

[bare metal]: https://en.wikipedia.org/wiki/Bare_machine

<!-- more -->

This blog is openly developed on [GitHub]. If you have any problems or questions, please open an issue there. You can also leave comments [at the bottom]. The complete source code for this post can be found in the [`post-01`][post branch] branch.

[GitHub]: https://github.com/phil-opp/blog_os
[at the bottom]: #comments
[post branch]: https://github.com/phil-opp/blog_os/tree/post-01

<!-- toc -->

## Introduction